UK's mass facial-recognition roll-out alarms rights groups

Outside supermarkets or in festival crowds, millions are now having their features scanned by real-time facial-recognition systems in the UK -- the only European country to deploy the technology on a large scale.

At London's Notting Hill Carnival, where two million people are expected to celebrate Afro-Caribbean culture over Sunday and Monday, facial-recognition cameras are being deployed near entrances and exits.

The police said their objective was to identify and intercept wanted individuals by scanning faces in large crowds and comparing them with thousands of suspects already in the police database.

The technology is "an effective policing tool which has already been successfully used to locate offenders at crime hotspots resulting in well over 1,000 arrests since the start of 2024," said Metropolitan Police chief Mark Rowley.

The technology was first tested in 2016 and its use has increased considerably over the past three years in the United Kingdom.

Some 4.7 million faces were scanned in 2024 alone, according to the NGO Liberty.

UK police have deployed the live facial-recognition system around 100 times since late January, compared to only 10 between 2016 and 2019.

- 'Nation of suspects' -

Recent deployments include those before two Six Nations rugby games and outside two Oasis concerts in Cardiff in July.

When a person on a police "watchlist" passes near the cameras, the AI-powered system, often set up in a police van, triggers an alert.

The suspect can then be immediately detained once police checks confirm their identity.

But such mass data capture on the streets of London, also seen during the coronation of King Charles III in 2023, "treats us like a nation of suspects", said the Big Brother Watch organisation.

"There is no legislative basis, so we have no safeguards to protect our rights, and the police is left to write its own rules," Rebecca Vincent, its interim director, told AFP.

The technology's private use by supermarkets and clothing stores to combat the sharp rise in shoplifting has also raised concerns, with "very little information" available about how the data is being used, she added.

Most of these retailers use Facewatch, a service provider that compiles a list of suspected offenders in the stores it monitors and raises an alert if one of them enters the premises.

"It transforms what it is to live in a city, because it removes the possibility of living anonymously," said Daragh Murray, a lecturer in human rights law at Queen Mary University of London.

"That can have really big implications for protests but also participation in political and cultural life," he added.

Often, those using such stores do not know that they are being profiled.

"They should make people aware of it," Abigail Bevon, a 26-year-old forensic scientist, told AFP by the entrance of a London store using Facewatch.

She said she was "very surprised" to find out how the technology was being used.

While acknowledging that it could be useful for the police, she complained that its deployment by retailers was "invasive".

- Banned in the EU -

Since February, EU legislation governing artificial intelligence has prohibited the use of real-time facial recognition technologies, with exceptions such as counterterrorism.

Apart from a few cases in the United States, "we do not see anything even close in European countries or other democracies", stressed Vincent.

"The use of such invasive tech is more akin to what we see in authoritarian states such as China," she added.

Interior minister Yvette Cooper recently promised that a "legal framework" governing the technology's use would be drafted, focusing on "the most serious crimes".

But her ministry this month authorised police forces to use the technology in seven new regions.

The cameras are usually mounted in vans, but permanent ones are also scheduled to be installed for the first time in Croydon, south London, next month.

Police say they have "robust safeguards", such as disabling the cameras when officers are not present and deleting the biometric data of those who are not suspects.

However, the UK's human rights regulator said on Wednesday that the Metropolitan Police's policy on using the technology was "unlawful" because it was "incompatible" with rights regulations.

Eleven organisations, including Human Rights Watch, wrote a letter to the Metropolitan Police chief urging him not to use the technology during Notting Hill Carnival. They accused him of "unfairly targeting" the Afro-Caribbean community and highlighted the racial biases of AI.

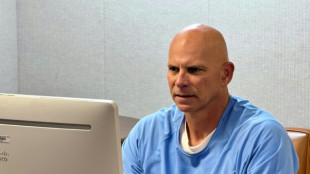

Shaun Thompson, a 39-year-old black man living in London, said he was arrested after being wrongly identified as a criminal by one of these cameras and has filed an appeal against the police.

B.Groebner--SbgTB